PTSD Research - Avoiding Misunderstanding

PTSD research can be seriously misunderstood, or even incomprehensible (yes! it's true!). Yet there are some simple tactics one can use to avoid this: know what "controlled research" is trying to do; be aware of the necessarily distorted view such research tends to offer us; know the single most important statistic you need in such research to make sense of things; know the kind of research we really need (so you can recognize it when you see it); know the limitations of each of the two sorts of language research uses; and, finally, appreciate the difference between belief and knowledge.

(Image caption: Add variables! Reduce error! Know more!)

"Knowledge" means "prediction"

Psychological trauma has been known about for centuries, by a variety of names, but only recently have we had ways of looking at it that allow us to make good predictions. Since we cannot see PTSD's causes directly, the only validation our ideas about PTSD can achieve comes from saying "If what we think is true, then here is what should happen if we..." - and here we talk about some intervention, some alteration of environment, thought, behavior, or nervous system, which we think might reduce or resolve the problem.

The very best way to scientifically examine our models is to engage in formal research, of which there are basically two kinds: experimental and post facto ("after the fact"). In the first type, we have a high degree of control over the situation we're looking at. In the second, we control nothing; we merely observe. When people are involved, there are certain kinds of experiments we might like to do but cannot, because of the time periods involved, the costs, or our ethics. That means we do more post facto research (also called epidemiological, or cross-sectional) than we'd really like.

Science's two languages - and "control"

When we observe, we record what we see in two different languages: natural language (highly expressive, but not precise) and mathematical language (inexpressive, but very precise). Natural language is best for describing what we did to set up our observations, and mathematical language is best for recording and making sense of our observations. In fact, with math we can achieve, even in non-experimental observations, something like the high degree of control we get in true experiments.

What we control are the variables we do NOT want messing up our observations. Suppose you're trying to observe the natural behavior of crows in a field while you stand, motionless, where they can see you. Sadly, you brought your dog along. He's just not into science. His presence and behavior will dramatically change the crow behavior you're trying to observe. Solution: control the situation by leaving him home! That's the whole idea behind the notion of an experiment: eliminate ("control") unwanted variables.
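When we can't leave the dog at home, as in post facto research, we can sometimes remove its influence mathematically instead. One simple way to do that is stratification: compare like with like. The sketch below is only an illustration under invented assumptions. The records, the two severity levels, and the function names are hypothetical, not data from any real PTSD study. In this made-up sample, more severe cases happen to receive longer treatment and also recover more slowly, so a crude comparison makes longer treatment look harmful, while comparing within each severity level shows it helping.

```python
from statistics import mean

# Hypothetical observational records, invented for illustration only:
# (severity at intake, received long treatment?, weeks to recovery)
records = [
    ("mild", False, 10), ("mild", False, 12), ("mild", False, 11), ("mild", True, 7),
    ("severe", False, 31), ("severe", True, 26), ("severe", True, 28), ("severe", True, 27),
]

def crude_difference(rows):
    """Mean weeks to recovery with long treatment minus without, ignoring severity."""
    long_t = [weeks for _, treated, weeks in rows if treated]
    short_t = [weeks for _, treated, weeks in rows if not treated]
    return mean(long_t) - mean(short_t)

def stratified_difference(rows):
    """The same difference computed within each severity level, then averaged.
    Equal weight per stratum is a simplifying assumption of this sketch."""
    diffs = []
    for level in sorted({severity for severity, _, _ in rows}):
        stratum = [r for r in rows if r[0] == level]
        diffs.append(crude_difference(stratum))
    return mean(diffs)

print("crude difference:", crude_difference(records))
print("controlling for severity:", stratified_difference(records))
```

Here the crude comparison says long treatment adds 6 weeks, while the stratified one says it saves 4. Real analyses usually weight strata by size or use regression adjustment, and either way they can only control variables that were actually measured.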

Making scientific knowledge realistic and useful

It is an unfortunate and often unavoidable artifact of research that, in trying to understand a complex situation by removing all irrelevant variables, whether by experimental design or by mathematical manipulation, we create a very unnatural situation.

With luck, we WILL lay bare the influence of our variable of interest - be it relationship quality, intervention model, fee structure, color of furniture, or whether or not you serve fruit punch in your waiting room. However, looking at just one variable can easily give the impression that IT is the answer to our problem. In reality, problems are hardly ever about one variable, and the particular problem we are eventually hoping to solve won't be found in the controlled confines of an experimental situation. We deal with this characteristic distortion of reality, which research design itself creates, in two completely different ways.

The crucial statistic: effect size, and its dark twin - error

The first is to realize that while ideally our variable of interest (say, number of weeks of treatment offered for PTSD) would completely explain why some people recover more quickly than others, in reality it never does. Every experiment embodies a degree of failure. We have a nifty way of talking about this in research: a statistic called the "effect size". The kind of effect size meant here is the proportion of variation explained (for example R² or eta-squared), a number between 0 and 1. If it's 0, then the number of weeks has NO influence on speed of recovery; if it's 1, then it completely determines the speed of recovery. In reality, it will be somewhere in between.

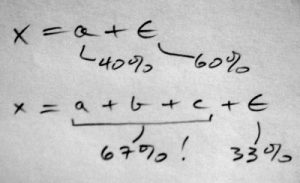

Suppose we run our experiment and get an "experimental effect": number of weeks seems to matter. But how much? Our effect size turns out to be 0.40, meaning 40% of the variation in recovery speed is accounted for, and that's a pretty good result for a single variable. But what about the other 60%? At first that's simply called "error", and THAT's where tomorrow's science will be done. If we want to increase our ability to determine speed of recovery (our effect size), the logical way to do that is to add variables to our experiment: things like different treatment models, length and frequency of treatment sessions, and so on. But our success will always be measured by that effect size statistic.

The research most needed: multivariate

Our second great step toward reality in research is to investigate the effects of multiple variables operating simultaneously. To do this, we will have to change several aspects of how we set up, observe, and report our research. Still, whatever verbal descriptions may suggest, we will always have some failure: an effect size of less than 1. There are a number of good reasons for this, but the "take-away" is simply that even at best our understanding has limits. We understand more today than a decade ago, and all in all these are good times for psychotherapy, but in another decade things will be significantly better.

Given that our research will always embody a degree of failure, we should mistrust ANY mere verbal description of what's going on. While it can be marvelously expressive and descriptive, its ability to be accurate is limited. Our degree of success, and failure, will only ever be seen in actual measurements.

Belief is a beginning, not an end

This is a major difference between science and belief-systems. In the latter, we speak as if we simply understand. In science, we speak of "understanding" in carefully qualified terms, because if what we know matters, what we do NOT know also matters. Our understanding is always, repeat always, partial, and we are ethically bound to acknowledge that. Over time, our understanding improves.

This tends to drive people strongly committed to belief-based world views fairly crazy. Belief may comfort, but it never has the power of knowledge. In science, "belief" is a source of hypotheses to be tested, not some simplistic end point. We hope to turn belief (hypothesis) into a decent level of understanding, which will always be less than perfect.

Twenty years ago we had no effective, research-validated treatment for PTSD. Now we have two well-validated models, EMDR (Eye Movement Desensitization and Reprocessing) and PE (Prolonged Exposure), and a number of derivatives that are strong candidates for validation. Research is hard work, but it gets results. To do good research, and to use its findings, we just need to view it correctly - as it is, not as we might like to think of it. Admitting what we don't know and can't do motivates continued work, and that will give us an ever better world for victims of psychological trauma.


APA Reference

Cloyd, T. (2013, August 21). PTSD Research - Avoiding Misunderstanding, HealthyPlace. Retrieved on 2026, March 6 from https://www.healthyplace.com/blogs/traumaptsdblog/2013/08/ptsd-research-avoiding-misunderstanding

Author: Tom Cloyd, MS, MA

Thank you so much for posting this. It's a very helpful introduction (or reminder) about what science is, how experimentation works, and what the limits of any scientific finding are. "Lay readers" like myself tend to forget the inherent uncertainty or margin of error in research -- and the "popular" media's reporting on the latest developments doesn't help.

And thank YOU, Kathleen, for your interest and enthusiasm.

Ordinary thinking is like the knives we find in most kitchens - sharp enough, but not really! Thoughtful study of how scientific thinking actually works really can give you a much sharper mind. It's actually NOT useful to think one knows what one does not, or that we know more than we actually do. You seem to appreciate this.

People get irritated about the fact that in science we keep changing our minds. Well, what's the alternative? Stay with mere belief, which is actually very far from real knowledge? Hang on to old ideas just because they're familiar, when we could drop them and pick up new and better ones?

It's obvious what a thoughtful person would want to do, I think. You are surely such a thoughtful person, and this will benefit you all your days, I truly believe.

Thanks again for your interest and for sharing your thoughts.

Tom